I was intrigued by the results of the two polls I ran on AI as a consequence of last night's video on that subject.

The video was just 2 minutes and 42 seconds long, as we try to keep those we put out later in the day quite short, but it did, I think, get near to the nub of the subject, with a focus on jobs and the choices we can make about our relationship with AI.

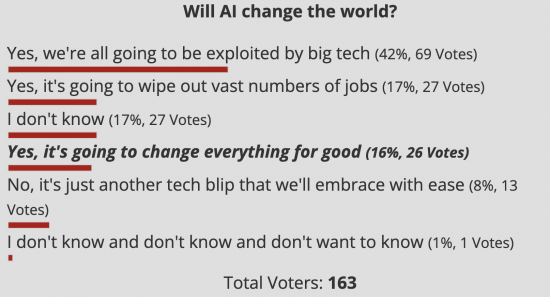

This was the poll finding on this blog:

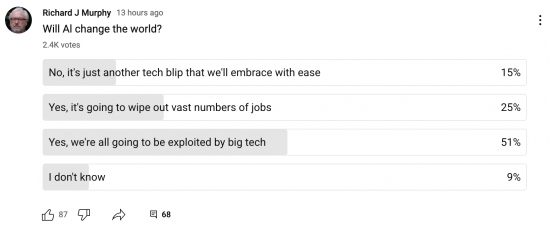

On YouTube, we could only offer four options, and so two got dropped. This was the result there, so far (with more than 60% of viewers voting):

The big fear is not, apparently, for jobs, which I expected to dominate, but is instead about exploitation. That genuinely helps me frame my own reaction to this issue, as it shows me what people are thinking.

That said, I noticed other poll data on AI published by Substack yesterday. They had polled 2,000 of their writers and found interesting data. As they noted:

Out of about 2,000 surveyed publishers:

- 45.4% said they're using AI

- 52.6% said they're not

- 2% were unsure

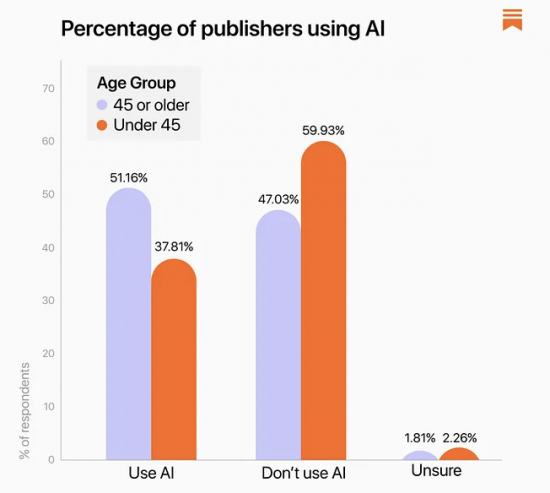

The data on the age of those using AI was interesting:

Older creators on Substack were more inclined to use AI. That was not what I was expecting.

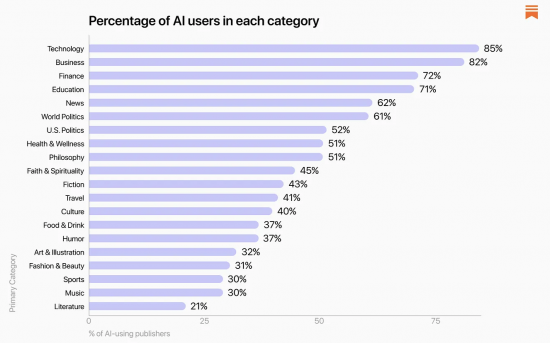

The interests of those using AI were less surprising:

The bias towards tech, business, finance and education was probably to be expected.

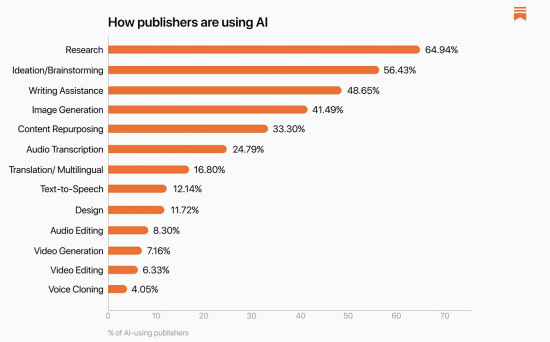

The uses made were also interesting:

I am using it for categories 1, 3, and 5, although writing assistance and content repurposing are most especially useful. By this, I mean that once I have an idea, I use AI to transform my draft text and ideas into video prompts, which I then use to maintain the narrative flow of the videos. This saves a lot of time writing PowerPoint presentations, but the script is mine throughout, and I do not speak to pre-written texts.

Additionally, I then use AI to suggest ways to promote the resulting videos on YouTube, which appears to be working. That was a skill I did not have, and I am learning it, and AI is helping.

What I am not doing is using it much for ideation or research. I am not sure it can beat my own imagination or Google as yet.

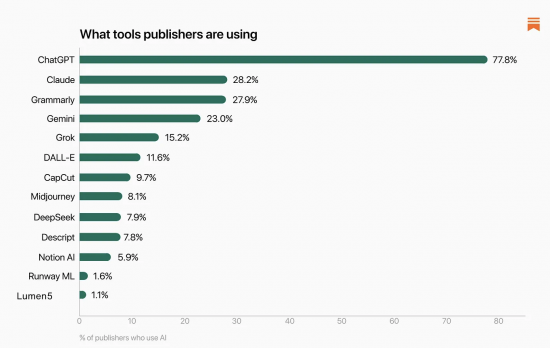

When looking at what programs people are using, I found this interesting:

We use ChatGPT, Claude, Grammarly, Deepseek and Descript. I think we must be very early adopters to use it so much. But let me be clear, everyone using Google now uses AI in some way, whilst many spellcheckers and dictation services, which I use all the time, are also AI-based. You may already be using AI more than you think.

Comments are welcome. So too would answers to this short poll be:

Is AI and its implications a topic on which I should write more?

- Yes (54%, 97 Votes)

- Only if you focus on the political economic consequences of it (39%, 70 Votes)

- Don't know (5%, 9 Votes)

- No (2%, 3 Votes)

Total Voters: 179

Thanks for reading this post.


For generative AI, I think I’ve gone through the hype cycle and think there are some uses for it now. These are language tools that can provide good insight on language and culture (which is a thing expressed using language).

Unfortunately, while gen AI appears intelligent, it can’t perform tasks that you’d expect a computer to be able to do. These models fail at chess, for example, because they don’t think in the way we expect.

This mismatch would be fine if it were not for the fact that they are pushed as intelligent super brains by their creators.

Unfortunately, at some stage soon a gen AI market crash is coming. The cost of building and running LLMs far outstrips what people are willing to pay for them (I can link to a great article about this if links are allowed). I’m not sure what comes after this crash. I should imagine smaller models that are almost as good, and specialized models, all of which cost a lot less.

Send me the link…

Goldman Sachs put this out recently:

“Gen AI: Too Much Spend For Too Little Benefit?”

https://www.goldmansachs.com/images/migrated/insights/pages/gs-research/gen-ai–too-much-spend%2C-too-little-benefit-/TOM_AI%202.0_ForRedaction.pdf

Yes, in a word.

I voted yes and I’m 73.

There’s an ocean of writing about AI, viewed from almost every conceivable angle. I fear that if you write in more or less general terms, your thoughts risk being lost in the background. If you concentrate on the areas where you are a recognised authority I think you stand a better chance of making an impact. That said, your accounts of how you personally use AI are informative and encouraging.

I agree with Robin at 8:59 – I suggest that getting into this very technical, fast-moving world would be a distraction from continuing your important work on political economy.

As for implications, Bruce Schneier recently highlighted an article on an aspect of AI which I have not seen discussed elsewhere:

‘…Simon Willison talks about ChatGPT’s new memory dossier feature. In his explanation, he illustrates how much the LLM—and the company—knows about its users. It’s a big quote, but I want you to read it all…

…He’s right… LLMs are going to open a whole new world of intimate surveillance.’

[ https://www.schneier.com/blog/archives/2025/06/what-llms-know-about-their-users.html ]

His other (wide-ranging) blog articles on AI start here:

https://www.schneier.com/tag/ai/

But this is going to be at the core of political economy.

I cannot see how you think they are different…

Just to be clear: I do want you to write about AI but specifically about the economic or financial aspects.

As an aside I recently saw an article in The Register about companies – Delta Airlines was named – developing AI and surveillance systems to give everyone pricing to suit their profile.

I felt this a useful angle.

This blog is an experiment if it is anything

The corporate control of AI (& other software, G**gle, Mi*****ft, A**le) is really important.

We’ve seen how the big finance giants can close access to money and money transfer in a blink of an eye.

With the “licensing/leasing” cloud models now in use, dissident voices can quickly be denied access to software and platforms just as easily, it is already happening (esp re Israel/Palestine activism).

That’s why on retirement I moved lock stock & barrel to Open Source software (Linux Mint) for my Operating System and software such as Libre Office, and email/browser and I avoid G***le and pay for my own email domain and cloud, storing most stuff at home, not online.

It’s not just an aversion to billionaires, it’s practical protection against being silenced and sanctioned by powerful evil people.

Next step is moving banking and savings and credit card, but I haven’t done that yet.

I think we have to be careful asking questions with binary answers (is it good, or is it bad).

I think better questions are: how is it good, how is it bad, and for whom?

Currently, I find AI very good at summarising information, and being able to repeat it based on any number of constraints (e.g. summarise again, but in 200 words, or in bullet points).

In principle, of course, but hard in a poll.

I use a range of the available free AI tools, and they perform differently when I ask them to do the same thing. I guess which I now use is down to personal preference. But the overwhelming impression I have is that, whilst useful, they can be seductive, and whenever there is a seductress around, there is danger. They answer the question, but often miss important aspects. Getting a complete picture can be time-consuming.

My concern, therefore, is what the effect on young users of AI will be. (By young, I mean up to 1st degree level). Can they think critically? Have they developed the needed analytical skills? Can they identify properly all the relevant issues?

AI seems stultifying to me. We need to see and discuss the topic in the round, not just be limited to a few topics. We need to properly understand AI as a tool. Too many in the political, media and related spheres seem not to have recognised the dangers.

We all need to keep our sceptical head on.

Few have ever had critical thinking skills.

I have a few 1950s paperbacks on critical thinking: Thouless, Stebbing, Abercrombie. There seems to be nothing like this on the market now.

One of my favourite poems. I’m afraid I can’t remember who wrote it.

Its awl write!

Eye have a spelling chequer

Witch came with my PC

And plainly marques fore my aye

Mistakes eye mite knot sea

Ive run this poem threw it;

Im shore your pleased to no

Its letter perfect in it’s weigh

My chequer tolled me sew

Very good

I agree with RobertJ. I read ‘Weapons of Math Destruction’ by Cathy O’Neil in 2015 and was shocked at the ubiquitous nature of algorithms shaping everyday life for ordinary people. For example, a college graduate in the US was finding it exceptionally difficult (in fact impossible) to get an interview for a job. Because his father had particular expertise in FOI requests and an inquiring mind, he eventually tracked this down to his son having had a period of some sort of depressive illness earlier in his life, which was in his secret ‘records’, and employers didn’t want someone with such a history. That was then, and this is now. Imagine how a certain US company with a contract to handle data could affect individuals’ treatment in the NHS.

Scary…

I would be very grateful if you were able to give us a step-by-step account of the use you make of AI. Being retired, I just use it to gather information.

I dictated this post and retired came out as retarded. I hope this wasn’t an AI assessment!

🙂

I will look at that – but not today. I need to be out with birds, soon.

Me, too – what Robert Hill has asked for 🙂

Happy bird watching, Richard 🙂 Hope we get to see some pictures later…

Six years ago we outsourced a lot of basic code tasks to a company in Pakistan.

Now, with Claude, we don’t need to. We can tell Claude the system and functions needed and it writes the code. It is exactly as we want and can be audited by us.